Overview

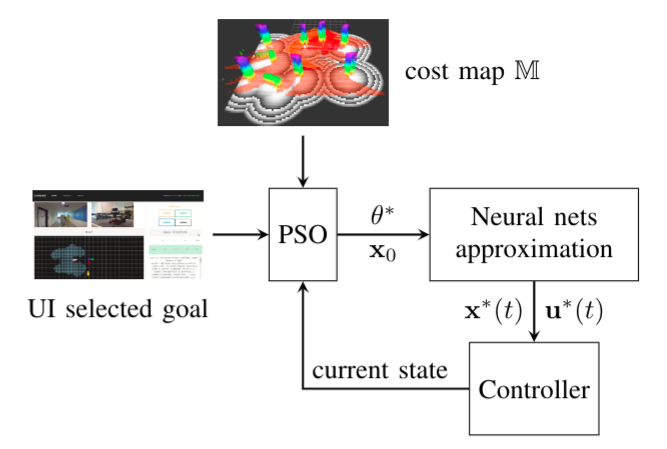

To concatenate with the state estimation and mapping modules, a 3D motion planning module is designed for real-time path and trajectory generation, where a collision-free and dynamically feasible reference trajectory is generated to guide vehicles maneuver safely towards its desired target.

We focus on the local planning problem, which is solved by Motion primitives (MPs), spanning a local search tree to abstract the continuous state space. We sample on the vehicles’ boundary state constraints and generate the actual motion by solving a boundary value problem. The generated MPs are termed as the boundary state constrained primitives (BSCPs).

we utilize the neural network (NN) to learn the BSCPs solutions offline and approximate them with NN during online optimization. A gradient-free method using the NN and the particle swarm optimization (PSO) is designed to construct a dynamically evolving single layer tree that gradually converges to the optimal local target in the planning. Complex and long-range motions can then be generated by combining the local target with a sampling based global planner. More information please refer to our internal report.

Usage

Installation

Please refer to System Overview page. The us_ws repository is for planning.

Nodes

Put at lease the following section in your launch file in order to use planning.

## mapping

<include file="$(find edt)/launch/edt_ddrone.launch" >

<arg name="system_id" value="$(arg system_id)"/>

</include>

## motion planning

<include file="$(find nndp_cpp)/launch/nndp_ddrone.launch">

<arg name="system_id" value="$(arg system_id)"/>

<arg name="ctrlpred_location" value="$(arg ctrlpred_location)"/>

</include>

## reference generator

<include file="$(find rt_ref_gen)/launch/refgen_ddrone.launch">

<arg name="ctrlpred_location" value="$(arg ctrlpred_location)"/>

If you want to use referece generator only, then add the third block only from the above code in your launch file. Please use the refgen_ddrone.launch as an example to design your own node. As the requirement vairies from different projects.

1. edt_node

Please check out the mapping page.

2. nndp_cpp_node

Subscribed topics

-

/cost_map (edt::CostMap)

cost map from edt_node

-

/dummy/pos_cmd (geometry_msgs::Point)

target waypoints

-

/engage_cmd (std_msgs::Bool)

init planning command

-

/rt_ref_gen/current_state (ommon_msgs::state)

reference feedback

-

/mavros/position/local (geometry_msgs::PoseStamped)

UAV current pose from mavros. Stop subscribing this once after taking off as the feedback will come from the generated reference directly.

Published topics

-

points2 (pcl::PointCloudpcl::PointXYZ)

publish 2d point clouds

-

points_sp (pcl::PointCloudpcl::PointXYZ)

publish stereo point clouds

-

/nndp_cpp/tgt_show (geometry_msgs::PoseStamped)

planned target

Important Parameters

-

/home_name (string)

The path of the trained network parameters and the reference generator policy files.

-

fly_height (float)

Take off height.

-

system_id (int)

Drone’s id.

3. rt_ref_gen_kerrigan

Subscribed topics

-

/task_manager/mpc_init_position_nwu (geometry_msgs::PoseStamped)

Initial pose to initialize the reference generator. This is normally the taking off pose.

-

/nndp_cpp/tgt (common_msgs::target)

Target points to generate reference.

Published topics

-

rt_ref_gen/current_state (common_msgs::state)

Generated reference

Important Parameters

-

/home_name (string)

The path of the trained network parameters and the reference generator policy files.

MPC policy generator and neural network training

The repo is matlab code for MPC policy generator and neural network training.

Data Explain

Here we only care about triple_integrator part for each modules

Dp_part: dynamic programming policy generator

-

horizontal

- pi_hor.mat(trippleIntegrator_hor.m ): policy generated from for horizontal control

- q_bins: position range from -x to x

- qdot_bins: velocity range from -y to y

- qdd_bins: acceleration range from -z to z

- PI: policy, what is the control input given certain state

- J: optimal value function J

- dt: dynamics discrete time (0.05)

-

vertical

- pi_ver.mat(from trippleIntegrator_ver.m): policy generated for height control.

- q_bins_ver

- qdot_bins_ver

- qdd_bins_ver

- PI_ver

- J_ver

- dt

-

dt.bin, q_bins.bin, qdot_bin.bin, qdd_bins.bin, PI.bin, J.bin are for trajectory generation cpp code.

Learning_part: prepare the data for training and validation

- training_data_hor.mat (from DataGenerator_hor.m): data prepared for horizontal training, including n states with 20s prediction and u_square_total.

training_data_hor{n,1} = [p v a]': initail state

training_data_hor{n,2} = S: planned 20s of predicted 40 points

training_data_hor{n,3} = u_sq_total: sum of u2 * dt for the total 40 points

- training_data_ver_small.mat (from DataGenerator_ver.m): data prepared for vertical network training, including n states with 20s prediction and u_square_total, same as training_data_hor.

- INISTATE.mat: save the initial state data from training_data_hor{n,1}

- TARGET.mat: save S and u_sq_total data from training_data_hor{n,2} and training_data_hor{n,3}

- NN_hor.mat: trained 4-layer network parameter from array_0 to array_7.

Policy change

To change the policy in MPC for trajectory generation, please follow the following steps. Take horizontal position control policy as an example:

- Open the file /nndp/DP_part/triple_integrator/trippleIntegrator_hor.m

- Find line 19-21, where you will see

q_bins = [-40:2:-12 -10:1:-5 -4.75:0.25:4.75 5:1:10 12:2:40];

qdot_bins = [-6:1:-5 -4.75:0.25:4.75 5:1:6];

qdd_bins = [-8:1:-5 -4.75:0.25:4.75 5:1:8];

Q_bins: position range from -40 to 40 Qdot_bins: velocity range from -6 to 6 Qdd_bins: acceleration range from -8 to 8

Just change the limits of these three arrays and run the function directly, you will get the policy in .mat format.

- Transfer the mat to bin file policy. Run saveThePolicy.m in the same folder, you will get the following bin files:

load pi_hor;

saveAsBin(dt,strcat(folderName,'/','dt','.bin'));

saveAsBin(PI,strcat(folderName,'/','PI','.bin'));

saveAsBin(q_bins,strcat(folderName,'/','q_bins','.bin'));

saveAsBin(qdd_bins,strcat(folderName,'/','qdd_bins','.bin'));

saveAsBin(qdot_bins,strcat(folderName,'/','qdot_bins','.bin'));

saveAsBin(J,strcat(folderName,'/','J','.bin'));

- Substitute these files under the /param/ctrlpred/pos_1/hor/ctrl folder in your project to apply the new policy.

Note: If you want to change the vertical position control policy, horizontal velocity control policy, vertical velocity control policy, please modify the same params in corresponding files, e.g. trippleIntegrator_ver.m, double_Integrator_hor.m, double_Integrator_ver.m.

To change the policy in MPC for target selection in path planning, you will need to retrain the network with the new policy. There is no ready to use scripts yet. I will have to write one for it.

Changes made for target tracking

-

reset the limits in trippleIntegrator_hor.m q_bins = [-40:2:-12 -10:1:-5 -4.75:0.25:4.75 5:1:10 12:2:40]; qdot_bins = [-6:1:-5 -4.75:0.25:4.75 5:1:6]; qdd_bins = [-8:1:-5 -4.75:0.25:4.75 5:1:8];

-

add punishment in dynamic models due to the resistant force caused by the high speed

for i=1:n

x_next(:,i)=A*x(:,i)+B*u(i);

coef = 0.2 * x(:,i).^2 .* sign(x(:,i));

if(i == 1) x_next(:, i) = x_next(:, i) - coef * dt^2/2; end

if(i == 2) x_next(:, i) = x_next(:, i) - coef * dt; end

end

-

Lower down the punishment on v Change Q from Q = diag([5 8 0.01]).*dt; to Q = diag([5 4 0.01]).*dt;

-

Put punishment on a only when v>6 instead of doing it from all the time

if abs(Xerr(2,i))>6

C(i)=C(i)+(abs(Xerr(2,i))-6)^2*20;

if abs(Xerr(3,i))>3

C(i)=C(i)+(abs(Xerr(3,i))-3)^2*30;

end

end

Retrain Policy

Vertical and horizontal are trained separately, so here we use the vertical in tripple_integrator as an example.

- Generate new policy

- Change the default folder to “nndp/DP_part/triple_integrator” and then run the command:

$ trippleIntegrator_ver(true)

Wait for about 1 minute till the error is below 0.01 or the iteration is larger than 1500. The following graph will be showing as the evaluation result of the generated policy: from top to down are jerk input, p, v, a. The initial value for is [p, v, a] = x = [-0.5 0 0]. The pi_ver.mat will be generated which contains: q_bins_ver qdot_bins_ver qdd_bins_ver PI_ver J_ver dt;

control input u in vertical

vertical policy

Run $trippleIntegrator_hor(true) as well to get horizontal policy pi_hor.mat will be generated which contains: pi_hor.mat q_bins qdot_bins qdd_bins PI J dt. The initial state [p, v, a] = [-9, 0, 0] in this example.

control input u in horizontal

horizontal policy

-

Save the generted policy in bin files (optional) Run $saveThePolicy then you will get the ctrl* folder with all the bin files including ver and hor policy subfolders.

-

Generate training data (Takes two hours for 200000 iterations. Depends on the size of the training data you wanted to create)

- Change current folder to “nndp/Learning_part/tripple_integrator” and find DataGenertion*.m file.

- Change the policy folder path as “load ../../DP_part/triple_integrator/pi_ver.mat”

- Run $Data_Genertion_ver_D for example to obtain the vertical training data. Similar steps for horizontal policy training data. Then the training_data_ver_small.mat or training_data_hor.mat will be generated.

- Get the right format to feed in the neural network.

- Run $loaddata.m to transfer data from training_data_ver_small.mat into INISTATE.mat and TARGET.mat, or from training_data_hor.mat into same format.

- Train the newly generated policy

- Run testPython.ipynb to train the network with generated data. Make sure the data path and key is valid.

- Obtain array_0 to array_7.npy network parameters after training.

- Evaluated the newly trained policy

- Run testNN in the array folder to get NN_*.mat

- Run compare to get the following figure, from which we can see the NN planed the trajectory is perfectly mimic the data generated from the policy.